What Is a Cloud Native Application?

Cloud computing experts design and build cloud-native applications specifically to run on cloud-based infrastructure. This means the application makes the most of the distributed computing model to increase speed, flexibility, and quality while reducing deployment risk. Unlike traditional applications that are built to run on specific hardware in a particular data center, cloud-native applications are built to run anywhere.

Cloud-native applications are composed of small, independent, and loosely coupled services called microservices. These microservices can be developed, deployed, and scaled independently, which gives you a degree of agility and flexibility that is difficult to achieve with traditional monolithic applications.

In this article, you will learn:

- Features of Cloud Native Applications

- Cloud-Native vs. Cloud-Based Apps

- Benefits of Cloud Native Applications

- Cloud Native Infrastructure Flavors

- Does Cloud Native Introduce a Cultural Change in Organizations?

- Challenges of Cloud Native Apps

- Best Practices for Cloud-Native Application Development

Features of Cloud Native Applications

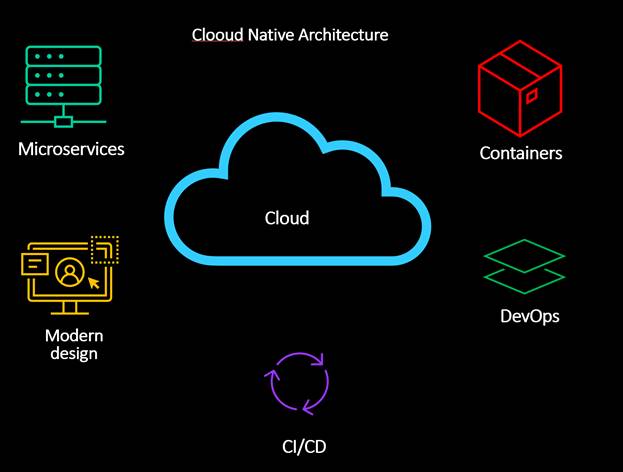

A cloud-native application is designed to run on a cloud-native infrastructure platform with the following four key traits:

- Cloud-native applications are resilient. Resiliency is achieved when failures are treated as the norm rather than something to be avoided. The application takes advantage of the dynamic nature of the platform and should be able to recover from failure.

- Cloud-native applications are agile.

- Cloud-native applications are operable. An operable application is reliable not only from the end user’s point of view but also from the vantage point of the operations team. An operable application keeps running without application restarts, server reboots, or hacks and workarounds.

- Cloud-native applications are observable. Observability provides answers to questions about the application state. Operators and engineers should not need to make conjectures about what is going on in the application. Application logging and metrics are key to making this happen.

The above list suggests that cloud-native applications impact the infrastructure that would be necessary to run such applications. Many responsibilities that have been traditionally handled by infrastructure have moved into the application realm.
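As one illustration of a responsibility moving into the application, the following is a minimal sketch of retrying a failed call with exponential backoff, so that a transient failure is treated as normal rather than fatal. The function name `call_with_retries`, its parameters, and the `flaky_dependency` stand-in are assumptions made for this example and do not come from any particular platform.

```python
import random
import time


def call_with_retries(operation, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call `operation` until it succeeds or `max_attempts` is reached.

    Waits base_delay * 2**attempt (plus a little random jitter) between tries.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 10))


# Hypothetical dependency that fails twice before succeeding.
attempts = {"count": 0}


def flaky_dependency():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("dependency temporarily unavailable")
    return "ok"


# The sleep function is replaced with a no-op so the example runs instantly.
result = call_with_retries(flaky_dependency, sleep=lambda seconds: None)
```

Passing `sleep` as a parameter is a design choice for this sketch: it keeps the retry logic testable without real waiting.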

Cloud-Native vs. Cloud-Based Apps

There is a key difference between cloud-native and cloud-based applications. While both kinds of applications are hosted in the cloud, they differ significantly in architecture, development approach, and operational principles.

Cloud-based applications are traditional or legacy applications that have been moved to the cloud. They don’t make the most of the cloud’s capabilities because they were not originally designed for the cloud environment. They work more like a tenant in a rented apartment, using the space but not the full potential of the infrastructure.

On the other hand, cloud-native applications are designed and built from the ground up to make the most of the cloud’s scalability, elasticity, and flexibility. They use services and features that are available only in the cloud environment, for example, auto-scaling and self-healing.

Benefits of Cloud Native Applications

Cost-Effective

With a cloud-native application, you can use the cloud provider’s infrastructure and pay only for what you use. This means that you can start small and scale your application as the business grows, without significant upfront investments.

Moreover, the microservices architecture allows more efficient resource use. Each service can be scaled independently, so you don’t waste resources on parts of your application that don’t need them. This can lead to significant cost savings over the long term.

Independently Scalable

In a traditional application, scaling usually involves investing in more powerful servers or adding more servers to your infrastructure. This can be expensive and time-consuming, and it frequently leads to overprovisioning, because you need to ensure that you have enough resources to handle peak loads.

In contrast, cloud-native applications are designed to be scalable from the ground up. Every microservice can be scaled independently, so you can allocate resources precisely where they’re required. You can handle high loads without overprovisioning and scale down your app when needed to save costs.
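To make independent scaling concrete, here is a small sketch that computes a replica count per service from its observed load. The ratio-based rule follows the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)); the service names, utilization figures, and replica limits are invented for illustration.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Return the replica count that brings utilization back toward the target."""
    raw = math.ceil(current_replicas * (current_utilization / target_utilization))
    # Clamp so a service never scales to zero or beyond its allowed maximum.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical services: (current replicas, average CPU utilization in percent).
services = {
    "checkout": (4, 90),  # busy: should scale up
    "catalog": (4, 15),   # idle: should scale down
    "search": (2, 60),    # on target: should stay put
}

# Each service is evaluated on its own, independently of the others.
plan = {
    name: desired_replicas(replicas, utilization, target_utilization=60)
    for name, (replicas, utilization) in services.items()
}
```

With these made-up numbers, `checkout` grows while `catalog` shrinks, which is the point of scaling each microservice separately rather than the whole application at once.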

Easy to Manage

Traditional applications can be complex and difficult to manage, especially as they grow and evolve. You need to ensure that all components are working correctly, handle updates and patches, and deal with any issues that arise.

Cloud-native applications are designed to be easier to manage. Every microservice is a separate process, so you can update, patch, or troubleshoot it independently of the others. This makes management much easier and less time-consuming. You can use orchestration platforms like Kubernetes to automate deployment, scaling, and management tasks.

Portability

Cloud-native applications are highly portable. This is because they are often platform-agnostic. You can run your application on any cloud provider’s infrastructure, or even move it between providers if necessary. This gives you a lot of flexibility.

Cloud Native Infrastructure Flavors

The ephemeral nature of the cloud demands automated development workflows that can be deployed and redeployed as needed. Cloud-native applications must be designed with infrastructure ambiguity in mind. This has led developers to rely on tools, like Docker, to provide a reliable platform to run their applications on without having to worry about the underlying resources. Influenced by Docker, developers have built applications with the microservices model, which enables highly focused, yet loosely coupled services that scale easily with demand.

Containerized Applications

Application containerization is a rapidly developing technology that is changing the way developers test and run application instances in the cloud.

The principal benefit of application containerization is that it provides a less resource-intensive alternative to running an application on a virtual machine. This is because application containers can share computational resources and memory without requiring a full operating system to underpin each application.

Application containers house all the runtime components that are necessary to execute an application in an isolated environment, including files, libraries, and environment variables. With today’s available containerization technology, users can run multiple isolated applications in separate containers that access the same OS kernel.

Serverless Applications

In cloud computing, the cloud provider often supplies the infrastructure needed to run users’ applications. With serverless, the provider takes on the work of running the servers and dynamically managing machine resources. It also provides automatic scaling, so serverless applications scale with the execution load they receive. The user can then focus solely on writing code and leave these tasks to the cloud provider.

Serverless applications, also known as Function-as-a-Service or FaaS, are offered by most enterprise cloud providers: users write only the code, and the provider manages the infrastructure behind the scenes. Developers write multiple functions to implement business logic and then integrate these functions so they can communicate with each other, resulting in a serverless architecture.
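The idea of several small functions wired together can be sketched as follows. The `(event, context)` signature mirrors a common FaaS handler convention, but the event layout, the function names, and the in-process `run_workflow` glue are assumptions for this example; a real provider would invoke and connect the functions for you.

```python
import json


def validate_order(event, context=None):
    """First function: reject payloads with a non-positive quantity."""
    body = json.loads(event["body"])
    if body.get("quantity", 0) <= 0:
        return {"statusCode": 400,
                "body": json.dumps({"error": "quantity must be positive"})}
    return {"statusCode": 200, "body": json.dumps(body)}


def price_order(event, context=None):
    """Second function: compute the order total in cents."""
    body = json.loads(event["body"])
    total = body["quantity"] * body["unit_price_cents"]
    return {"statusCode": 200, "body": json.dumps({"total_cents": total})}


def run_workflow(event):
    """Local stand-in for the provider-side integration between functions."""
    validated = validate_order(event)
    if validated["statusCode"] != 200:
        return validated  # stop early and return the validation error
    return price_order({"body": validated["body"]})
```

Each function holds one piece of business logic and only exchanges JSON, so either one could be deployed, scaled, or replaced without touching the other.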

Does Cloud Native Introduce a Cultural Change in Organizations?

Cloud Native is more than a tool set. It is a full architecture, a philosophical approach to building applications that take full advantage of cloud computing. In this context, culture is how individuals in an organization interact, communicate, and work with each other.

In short, culture is the way your enterprise goes about creating and delivering your service or product. If you have the perfect set of cloud platforms, tools, and techniques yet go about using them wrong—if you apply the right tools, only in the wrong culture—it’s simply not going to work. At best you’ll be functional but far from capturing the value that the system you’ve just built can deliver. At worst, that system simply won’t work at all.

The three major types of culture within enterprises are Waterfall, Agile, and Cloud Native. A quick primer:

Waterfall

Agile

Organizations adopt an iterative, feature-driven approach, delivering faster with two- to four-week sprints. Developers and teams break down applications into functional components, working on each one from start to finish.

Cloud Native

Organizations are built to take optimum advantage of cloud technologies (clouds will look quite different in the future, but these organizations also build to anticipate this). Small, dedicated feature teams of developers build and deploy applications rapidly, incorporating networking, security, and all other necessary components into the distributed system.

Cultural awareness also gives you the ability to start changing your organization from within, through a myriad of small steps all leading in the same direction. This allows you to evolve gradually and naturally alongside a rapidly changing world. Leaders define strategy by setting a long-term direction and then take deliberate, strategic steps in that direction.

Challenges of Cloud Native Apps

Below we cover a few challenges that any organization should carefully consider before and during the move to cloud native.

Managing a CI/CD Pipeline for Microservices Applications

Microservices applications are composed of a large number of components, each of which could be managed by a separate team and has its own development lifecycle. So instead of one CI/CD pipeline, as in a traditional monolithic app, a microservices architecture may have dozens or hundreds of pipelines.

This raises several challenges:

- Low visibility over the quality of changes being introduced to each pipeline

- Limited ability to ensure each pipeline adheres to security and compliance requirements (see the following section)

- No central control over all the pipelines

- Duplication of infrastructure

- Lack of consistency in CI/CD practices – for example, one pipeline may have automated UI testing, while others may not

To address these challenges, teams need to work together to align on a consistent, secure approach to CI/CD for each microservice. At the organizational level, there should be a centralized infrastructure that provides CI/CD services for each team, allowing customization for the specific requirements of each microservice.
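A centralized check of this kind could look like the sketch below, which audits every team’s pipeline against a shared set of required stages while still allowing extra, service-specific stages. The stage names, the `REQUIRED_STAGES` baseline, and the pipeline definitions are hypothetical and not tied to any particular CI/CD product.

```python
# Organization-wide baseline that every pipeline is expected to include.
REQUIRED_STAGES = {"build", "unit-test", "security-scan", "deploy"}


def missing_stages(pipeline_stages, required=REQUIRED_STAGES):
    """Return the required stages a pipeline lacks, in alphabetical order."""
    return sorted(required - set(pipeline_stages))


def audit_pipelines(pipelines):
    """Map each non-compliant service to the stages it is missing."""
    report = {}
    for service, stages in pipelines.items():
        gaps = missing_stages(stages)
        if gaps:
            report[service] = gaps
    return report


# Hypothetical pipelines owned by three different teams.
pipelines = {
    "payments": ["build", "unit-test", "security-scan", "ui-test", "deploy"],
    "catalog": ["build", "unit-test", "deploy"],
    "search": ["build", "deploy"],
}

report = audit_pipelines(pipelines)
```

Note that `payments` passes even though it adds a `ui-test` stage: the baseline sets a floor for consistency without preventing per-service customization.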

Cloud Native Security Challenges

Cloud-native applications present tremendous challenges for security and risk professionals:

A larger number of entities to secure

DevOps and infrastructure teams are leveraging microservices – using a combination of containers, Kubernetes, and serverless functions – to run their cloud-native applications. This growth is happening in conjunction with a constantly increasing cloud footprint. This combination leads to a larger number of entities to protect, both in production and across the application lifecycle.

Environments are constantly changing

Public and private cloud environments are constantly changing due to the rapid-release cycles employed by today’s development and DevOps teams. As enterprises deploy weekly or even daily, this presents a challenge for security personnel looking to gain control over these deployments without slowing down release velocity.

Architectures are diverse

Enterprises are using a wide-ranging combination of public and private clouds, cloud services, and application architectures. Security teams are responsible for addressing this entire infrastructure and how any gaps impact visibility and security.

Networking is based on service identity

Unlike traditional applications that use a physical or virtual machine as a stable reference point or node of a network, in cloud-native applications different components might run in different locations, be replicated multiple times, be taken down, and then get spun up elsewhere. This requires a network security model that understands the application context, the identity of microservices, and their networking requirements, and builds a zero-trust model around those requirements.
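The sketch below shows what an identity-based, default-deny rule set might look like: requests are matched on the names of the calling and called services rather than on IP addresses, which change as components are rescheduled. The service names, the port, and the `ALLOWED` rule set are illustrative assumptions; real systems typically also verify identity cryptographically, which is omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source_service: str       # identity of the caller, not its IP address
    destination_service: str  # identity of the service being called
    port: int


# Explicit allow-list: anything not listed is denied (zero trust by default).
ALLOWED = {
    ("frontend", "orders", 8443),
    ("orders", "payments", 8443),
}


def is_allowed(request, rules=ALLOWED):
    """Return True only if this exact service-to-service call is permitted."""
    key = (request.source_service, request.destination_service, request.port)
    return key in rules
```

Because the rule is keyed on identity, it continues to hold when an `orders` replica is taken down and started again elsewhere with a different address.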

Best Practices for Cloud-Native Application Development

Utilize Infrastructure as Code

Infrastructure as Code (IaC) is a method of managing and provisioning computing infrastructure through machine-readable definition files, rather than manual hardware configuration or interactive configuration tools. IaC is a key practice for cloud-native applications, as it allows for the automation of infrastructure setup, leading to faster and more reliable deployments.

With IaC, you can define your infrastructure in code files, version them as you would application code, and keep them in source control. This allows for consistency across environments, as you can use the same scripts to set up your development, testing, and production environments. It also makes it easier to replicate your infrastructure, whether for scaling or disaster recovery.

Furthermore, IaC supports the principles of immutable infrastructure, where servers are never modified after they are deployed. If a change is required, a new server is created from a common image, and the old one is discarded. This approach reduces the inconsistencies and drifts that can occur over time, leading to more reliable and stable infrastructure.
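The declarative and immutable ideas above can be sketched in a few lines: a desired state is compared with the current state, and any changed resource is replaced rather than modified in place. The `plan` function, the resource names, and the spec fields are assumptions for illustration only and do not represent any specific IaC tool.

```python
def plan(desired, current):
    """Compute the actions needed to move `current` toward `desired`.

    A changed resource is replaced, never patched in place, in keeping
    with the immutable infrastructure approach.
    """
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("replace", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Desired state, as it might be kept in source control (hypothetical values).
desired = {
    "web": {"image": "web:2", "count": 3},
    "worker": {"image": "worker:1", "count": 1},
}

# What is currently running.
current = {
    "web": {"image": "web:1", "count": 3},
    "cache": {"image": "cache:1", "count": 1},
}

actions = plan(desired, current)
```

Running the same plan against development, testing, and production states is what keeps the environments consistent, and applying it to an empty `current` recreates everything, which is useful for disaster recovery.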

Build Observability into Applications

Observability is crucial for maintaining the health and performance of cloud-native applications. To build observability into applications, it’s important to implement comprehensive logging, monitoring, and tracing. These elements work together to provide a holistic view of the application’s state and behavior:

- Logging captures discrete events, such as errors or transactions.

- Monitoring keeps track of metrics and trends over time.

- Tracing connects the dots between various services, making it possible to follow the path of a request through microservices.
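The three elements above can be sketched together using only the Python standard library: a structured log line per event, counters as simple metrics, and a trace ID that is passed along so a request can be followed across services. The `handle_request` function, the field names, and the in-memory `metrics` counter are assumptions for this example; a production system would usually export these to dedicated tooling.

```python
import json
import logging
import time
import uuid
from collections import Counter

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("orders")

# In-memory metrics; a stand-in for a real metrics backend.
metrics = Counter()


def handle_request(payload, trace_id=None):
    """Handle one request while emitting a log event, metrics, and a trace ID."""
    # Reuse the caller's trace ID if present so the request can be followed
    # across services; otherwise start a new trace.
    trace_id = trace_id or uuid.uuid4().hex
    started = time.perf_counter()

    metrics["requests_total"] += 1
    status = "ok" if "item" in payload else "error"
    if status == "error":
        metrics["errors_total"] += 1

    # Logging: one discrete, machine-readable event per request.
    logger.info(json.dumps({
        "event": "request_handled",
        "trace_id": trace_id,
        "status": status,
        "duration_ms": round((time.perf_counter() - started) * 1000, 3),
    }))
    return {"status": status, "trace_id": trace_id}
```

A downstream service would receive the returned `trace_id` and pass it to its own handler, so that the log lines from both services can be joined on that value.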

Design for Scalability and Fault Tolerance

Designing cloud-native applications for scalability and fault tolerance is fundamental to leveraging the full benefits of the cloud.

Fault tolerance ensures that the application continues to operate correctly even when components fail.
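One widely used way to keep operating when a component fails is the circuit breaker pattern, sketched minimally below. It is offered as an example technique rather than something this article prescribes; the class name, thresholds, and the injectable `clock` are assumptions chosen to keep the sketch small and testable.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency for a while and serve a fallback."""

    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: fail fast without calling
            # Cool-down elapsed: fall through and allow one trial call.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            return fallback()
        # Success: close the circuit and reset the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The fallback might return cached or default data, so the application as a whole keeps responding while the failed component recovers.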

Test Microservices Strategically

Testing microservices requires a strategic approach that accommodates their distributed nature and the independent lifecycle of each service. A robust testing strategy should include unit testing, integration testing, and end-to-end testing. Unit tests are crucial for ensuring that each microservice functions correctly in isolation. They are typically quick to run and can be integrated into the development process as part of a continuous integration pipeline.

Integration testing checks the interactions between microservices, ensuring that they communicate and function together as expected. This phase of testing can uncover issues in the interfaces and interaction patterns that might not be apparent in unit testing alone. End-to-end testing validates the entire system’s behavior and performance under a production-like scenario.
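As a small illustration of testing a microservice in isolation, the sketch below unit-tests an order service by replacing its inventory dependency with a stub. `OrderService`, `StubInventory`, and the accept/reject rule are hypothetical names and logic invented for this example.

```python
import unittest


class OrderService:
    """Service under test; it depends on a separate inventory service."""

    def __init__(self, inventory_client):
        self.inventory_client = inventory_client

    def place_order(self, item, quantity):
        if self.inventory_client.available(item) < quantity:
            return "rejected"
        return "accepted"


class StubInventory:
    """Stands in for the real inventory service so no network is needed."""

    def __init__(self, stock):
        self.stock = stock

    def available(self, item):
        return self.stock.get(item, 0)


class OrderServiceTest(unittest.TestCase):
    def test_accepts_when_stock_is_sufficient(self):
        service = OrderService(StubInventory({"book": 5}))
        self.assertEqual(service.place_order("book", 2), "accepted")

    def test_rejects_when_stock_is_insufficient(self):
        service = OrderService(StubInventory({"book": 1}))
        self.assertEqual(service.place_order("book", 2), "rejected")
```

Saved to a file, these tests can be run with `python -m unittest`. An integration test would swap the stub for a real inventory service instance to check that the two services actually communicate as expected.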

Learn More About Cloud Native Applications

Open Policy Agent: Authorization in a Cloud Native World

The Open Policy Agent (OPA) is a policy engine that automates and unifies the implementation of policies across IT environments, especially in cloud-native applications. OPA, which Styra created, has been accepted by the Cloud Native Computing Foundation (CNCF). OPA is offered under an open-source license.

Learn about Open Policy Agent (OPA) and how you can use it to control authorization, admission, and other policies in cloud-native environments, with a focus on K8s.